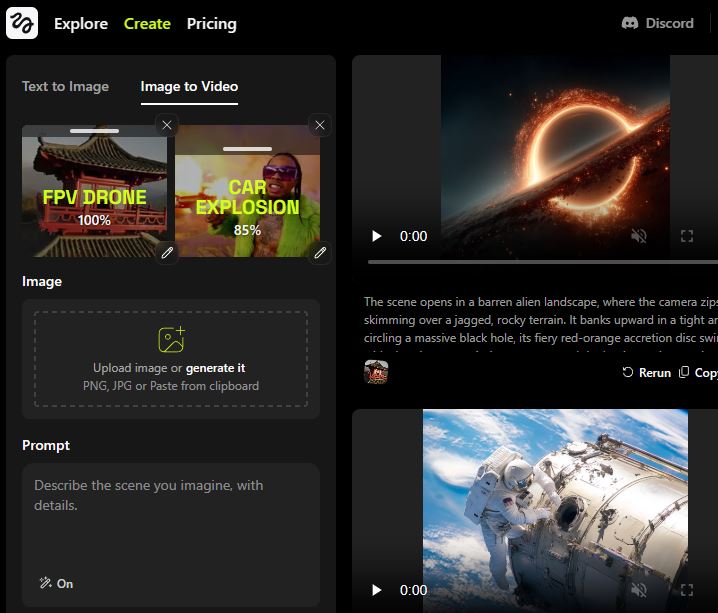

Higgsfield shook up AI video generation not long ago with striking effects that produce shots other tools can't match. Its new Mix feature lets you combine multiple effects in a single video. For example, you can pair the FPV drone effect with the car explosion effect.

Now, camera work isn’t limited by physics.

Introducing Higgsfield Mix – combine multiple motion controls in a single shot, including moves that aren’t possible with real cameras.

Also dropping 10 new motion controls built for speed, tension, and cinematic impact.

🧩 1/n pic.twitter.com/YpphhAkBid

— Higgsfield AI 🧩 (@higgsfield_ai) April 10, 2025

To get started, just upload an image. You can then choose how strongly each effect is applied.
