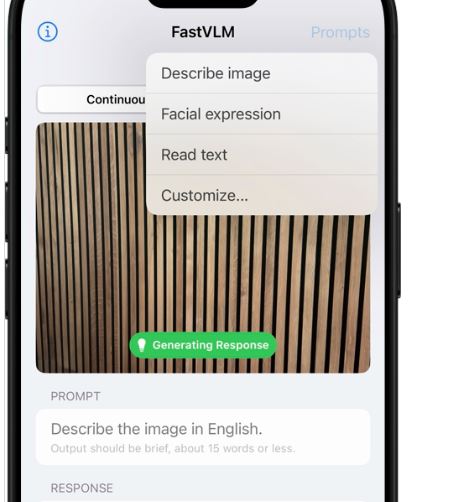

Apple is going to introduce its AI glasses in a couple of years, so it is no surprise that its researchers are working on AI vision models. Take FastVLM, for instance: it is a vision-language model built around efficient vision encoding. It is reported to run 3.2x faster than comparable models while also being 3.4x smaller.

Its vision encoder, FastViTHD, is a hybrid design that outputs fewer tokens and reduces encoding time for high-resolution images. A sample app lets you test the model's performance and comes with a set of built-in prompts. It is available on GitHub.
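To see why emitting fewer tokens matters at high resolution, here is a rough back-of-the-envelope sketch. It assumes a square image and an encoder that shrinks each side by a fixed factor, so the token count is the square of the reduced side length. The function name and the two reduction factors (16 and 64) are illustrative assumptions, not FastViTHD's published configuration.

```python
def visual_token_count(image_size: int, downsample: int) -> int:
    """Tokens for a square image when each side is reduced by `downsample`.

    Both arguments are hypothetical inputs for illustration only.
    """
    if image_size <= 0 or downsample <= 0:
        raise ValueError("image_size and downsample must be positive")
    if image_size % downsample != 0:
        raise ValueError("image_size must be divisible by downsample")
    side = image_size // downsample
    return side * side


if __name__ == "__main__":
    # Compare a coarser and a more aggressive reduction factor (assumed values).
    for size in (256, 512, 1024):
        light = visual_token_count(size, 16)
        heavy = visual_token_count(size, 64)
        print(f"{size}x{size}: {light} tokens at /16, {heavy} tokens at /64")
```

Because the count grows with the square of the resolution, an encoder that reduces the image more aggressively hands far fewer tokens to the language model, which is where most of the time savings would be expected to come from.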
