Plenty of AI models are now good at coding, writing, and reasoning, and the open-source ones are quickly catching up to proprietary models. MiniMax-M1 is an open-source AI model with 456B parameters and a 1M-token context length. When generating 100K tokens, it uses about 25% of the compute that DeepSeek R1 needs.

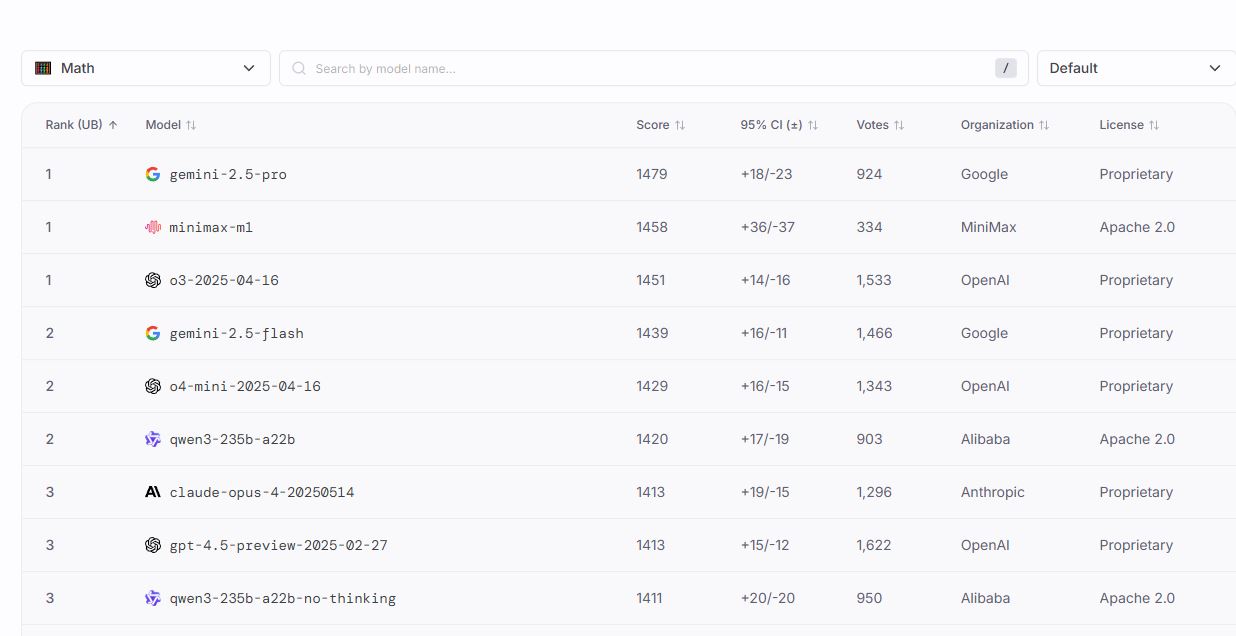

> 🚀 Excited to share that MiniMax-M1 is now live on the Text Arena leaderboard at #12! And has climbed to #1 in math! 🎉
>
> As the open-source hybrid MoE model with the world’s longest context window, we’re pushing boundaries in long-context reasoning and agentic tool use.💪 https://t.co/hNoVHIk6Km
>
> MiniMax (official) (@MiniMax__AI), July 2, 2025

MiniMax-M1 uses a hybrid attention mechanism. According to MiniMax, it consumes only about 25% of the FLOPs that DeepSeek R1 needs when generating 100K tokens, which is a 75% reduction rather than a 25% one.
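To get a feel for why a hybrid attention design can cut compute at long generation lengths, here is a minimal toy cost model in Python. It is a sketch under stated assumptions, not MiniMax's actual accounting: it counts only attention work in abstract "units", assumes a softmax layer's cost for each new token grows with the number of tokens seen so far while a linear-attention layer pays a fixed cost per token, and uses a hypothetical mix of 1 softmax layer for every 7 linear layers. The function names and the `state_cost` parameter are made up for illustration.

```python
def softmax_attention_cost(n_tokens: int) -> int:
    """Toy cost of one softmax layer while generating n tokens.

    Token t attends to all t tokens so far, so the total is 1 + 2 + ... + n.
    """
    return n_tokens * (n_tokens + 1) // 2


def linear_attention_cost(n_tokens: int, state_cost: int = 1) -> int:
    """Toy cost of one linear-attention layer: a fixed cost per token.

    state_cost is a hypothetical constant for updating a fixed-size state.
    """
    return n_tokens * state_cost


def hybrid_ratio(n_tokens: int, softmax_layers: int, linear_layers: int,
                 state_cost: int = 1) -> float:
    """Attention cost of a hybrid stack relative to an all-softmax stack
    with the same total number of layers."""
    total_layers = softmax_layers + linear_layers
    full = total_layers * softmax_attention_cost(n_tokens)
    hybrid = (softmax_layers * softmax_attention_cost(n_tokens)
              + linear_layers * linear_attention_cost(n_tokens, state_cost))
    return hybrid / full


if __name__ == "__main__":
    # Assumed layer mix (illustrative only): 1 softmax layer per 7 linear layers.
    for n in (1_000, 10_000, 100_000):
        print(f"{n:>7} tokens: hybrid/full attention cost = "
              f"{hybrid_ratio(n, 1, 7):.4f}")
```

In this toy model the ratio approaches the fraction of softmax layers (1/8 here) as the generation length grows, because the linear layers' cost becomes negligible next to the quadratic term. It will not reproduce the 25% figure quoted above, since real FLOP counts also include feed-forward and MoE layers and depend on how the two models differ in size and architecture.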
