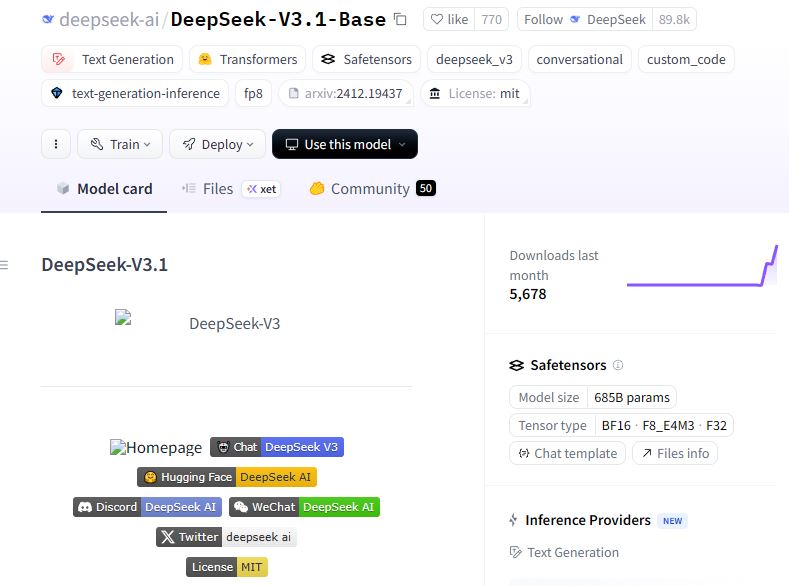

DeepSeek remains one of the best AI models around. Many of us are still waiting for R2, but DeepSeek has instead released DeepSeek V3.1, a single model that offers both thinking and non-thinking modes. In thinking mode, V3.1 reaches answers faster than DeepSeek-R1-0528, and both modes support a 128K-token context window. DeepSeek also reports that V3.1 outperforms its previous models on SWE-bench and Terminal-Bench.
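If you use the API, choosing between the two modes comes down to picking a model name. The sketch below is an assumption-labeled illustration: it assumes DeepSeek's OpenAI-compatible chat completions endpoint (`https://api.deepseek.com/chat/completions`), with `deepseek-reasoner` serving V3.1's thinking mode and `deepseek-chat` serving its non-thinking mode. The helper `build_request` is hypothetical, and it only builds the JSON body without sending anything.

```python
import json

# Assumption: DeepSeek's API is OpenAI-compatible and exposes V3.1's two modes
# as two model names on the same chat completions endpoint.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL_FOR_MODE = {
    True: "deepseek-reasoner",  # thinking mode
    False: "deepseek-chat",     # non-thinking mode
}


def build_request(prompt: str, thinking: bool) -> dict:
    """Hypothetical helper: return the JSON body for a single-turn chat request."""
    return {
        "model": MODEL_FOR_MODE[thinking],
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_request("Summarize this stack trace.", thinking=True)
    # You would POST this body to API_URL with an "Authorization: Bearer <key>" header.
    print(json.dumps(body, indent=2))
```

Keeping the mode as a boolean flag lets the same calling code switch between fast answers and step-by-step reasoning without duplicating request logic.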

Tools & Agents Upgrades 🧰

📈 Better results on SWE / Terminal-Bench

🔍 Stronger multi-step reasoning for complex search tasks

⚡️ Big gains in thinking efficiency (3/5) pic.twitter.com/qIknYSxfGd

— DeepSeek (@deepseek_ai) August 21, 2025

You can build AI apps on top of DeepSeek's API, where input costs $0.07 (7 cents) per 1M tokens on a cache hit and $0.56 per 1M tokens on a cache miss. Output costs $1.68 per 1M tokens.
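To see what these rates mean for a real workload, here is a minimal cost estimator. It assumes the per-1M-token prices quoted above (they may change, so check DeepSeek's pricing page) and that you already know how many input tokens hit or missed the cache. The function name `estimate_cost` is hypothetical.

```python
# Prices in USD per 1M tokens, as quoted in this post (assumed current; may change).
PRICE_INPUT_CACHE_HIT = 0.07
PRICE_INPUT_CACHE_MISS = 0.56
PRICE_OUTPUT = 1.68
TOKENS_PER_UNIT = 1_000_000


def estimate_cost(hit_tokens: int, miss_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: estimated USD cost for the given token counts."""
    total = (
        hit_tokens * PRICE_INPUT_CACHE_HIT      # input tokens served from cache
        + miss_tokens * PRICE_INPUT_CACHE_MISS  # input tokens not in cache
        + output_tokens * PRICE_OUTPUT          # generated tokens
    )
    return total / TOKENS_PER_UNIT


if __name__ == "__main__":
    # 2M cache-hit input, 1M cache-miss input, 500K output tokens.
    cost = estimate_cost(2_000_000, 1_000_000, 500_000)
    print(f"${cost:.2f}")
```

For that example the breakdown is $0.14 + $0.56 + $0.84, about $1.54, which shows that output tokens and cache misses dominate the bill while cached input is comparatively cheap.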

[HT]