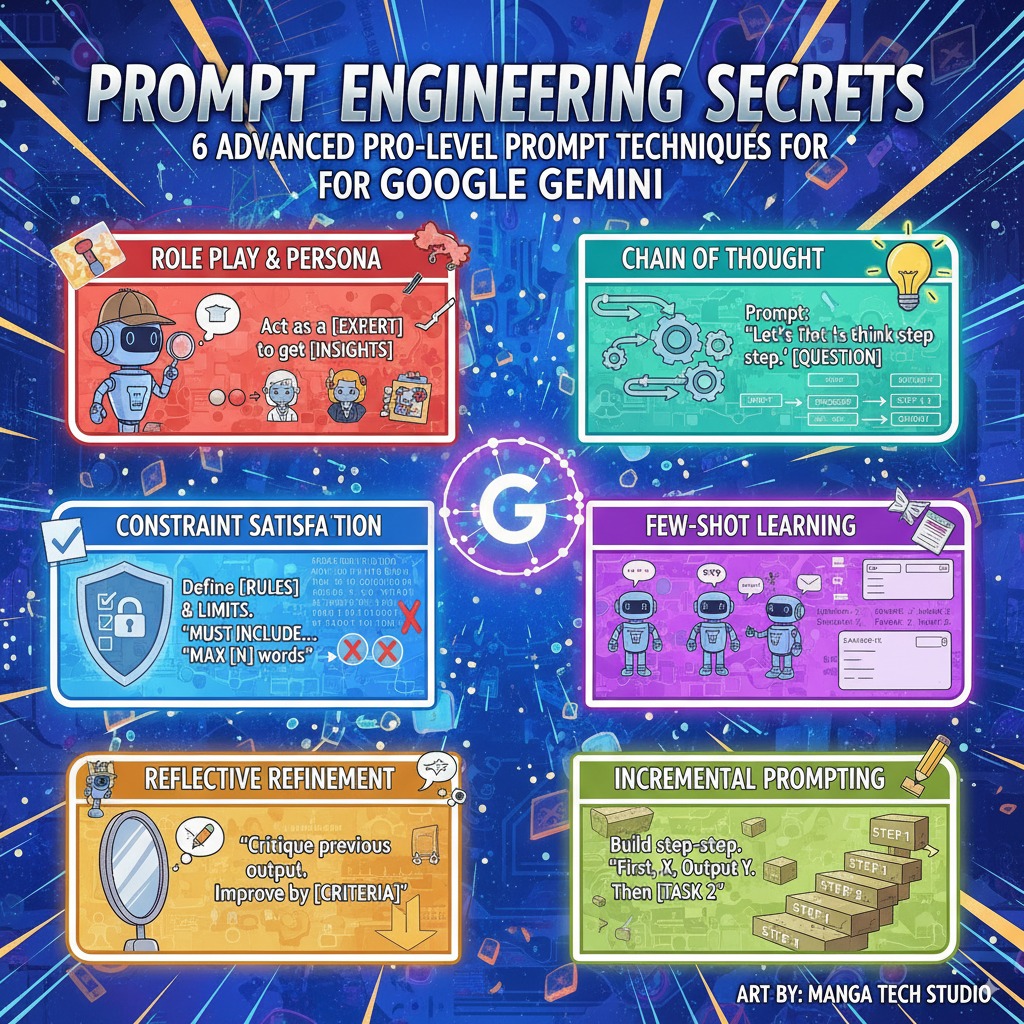

Google Gemini is one of the most capable large language models (LLMs) available. It can generate decent results on its own, but you will get better results with more advanced prompting techniques such as few-shot learning and incremental prompting. You can also improve the outputs by working step by step and giving the model feedback to refine its results.

Many newer models already apply chain-of-thought reasoning to produce more accurate results. With few-shot learning, you can guide the model toward the kind of output you expect. This infographic covers several prompt engineering techniques for Google Gemini. Here is how they work:

- Role Play & Persona: assign the AI a specific role or persona (e.g., historian, chef, teacher) so that it answers through the lens of that identity.

- Chain of Thought: ask the AI to reason the way a person would, breaking a problem down into logical steps before giving the final answer.

- Constraint Satisfaction: set explicit limits or requirements on the output, e.g., "under 100 words".

- Few-Shot Learning: provide input-output examples that demonstrate the desired format or style.

- Refinement: improve outputs by critiquing the AI's response and asking it to revise.

- Incremental: break a complex task down into smaller tasks and use each step's output as input for the next step.
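The techniques above can be combined when prompts are built in code. The following is a minimal sketch in Python, assuming the model is wrapped as a plain function that takes prompt text and returns response text; `build_prompt`, `refine`, and `run_incremental` are hypothetical helpers, not part of any Gemini SDK, and the actual API call is deliberately left out.

```python
from typing import Callable, Optional, Sequence

# Assumption: a "model" is any function mapping a prompt string to a response string.
# In practice it would wrap a real Gemini client call, which is not shown here.
Model = Callable[[str], str]


def build_prompt(
    task: str,
    persona: Optional[str] = None,
    constraints: Sequence[str] = (),
    examples: Sequence[tuple[str, str]] = (),
    query: Optional[str] = None,
    think_step_by_step: bool = False,
) -> str:
    """Assemble one prompt from persona, constraints, chain of thought and few-shot examples."""
    parts: list[str] = []
    if persona:
        # Role play & persona: frame the answer through a specific identity.
        parts.append(f"You are {persona}.")
    parts.append(task)
    if constraints:
        # Constraint satisfaction: state the limits explicitly as a bullet list.
        parts.append("Requirements:\n" + "\n".join(f"- {c}" for c in constraints))
    if think_step_by_step:
        # Chain of thought: ask for the reasoning steps before the answer.
        parts.append("Think through the problem step by step, then give the final answer.")
    for example_input, example_output in examples:
        # Few-shot learning: show input/output pairs in the desired format.
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    if query is not None:
        # The real query reuses the Input/Output layout with the output left blank.
        parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


def refine(model: Model, prompt: str, critique: str) -> str:
    """Refinement: generate a draft, then ask the model to revise it against feedback."""
    draft = model(prompt)
    return model(
        f"{prompt}\n\nHere is a draft answer:\n{draft}\n\n"
        f"Revise the draft to address this feedback: {critique}"
    )


def run_incremental(model: Model, steps: Sequence[str]) -> list[str]:
    """Incremental prompting: feed each step's output into the next step's prompt."""
    outputs: list[str] = []
    previous = ""
    for step in steps:
        prompt = step if not previous else f"{step}\n\nPrevious result:\n{previous}"
        previous = model(prompt)
        outputs.append(previous)
    return outputs


if __name__ == "__main__":
    prompt = build_prompt(
        task="Classify the sentiment of the product review.",
        persona="a customer-support analyst",
        constraints=["Answer with a single word: positive or negative."],
        examples=[("Arrived broken.", "negative"), ("Works perfectly!", "positive")],
        query="Battery lasts all day.",
    )
    print(prompt)

    # Stand-in model for local testing: it only reports the first line it was given.
    def fake_model(p: str) -> str:
        return f"result of <{p.splitlines()[0]}>"

    for out in run_incremental(fake_model, ["Outline the essay.", "Draft the introduction."]):
        print(out)
```

Keeping the model behind a plain function makes the prompt logic testable without network access; swapping in a real Gemini call only changes what is passed as `model`.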