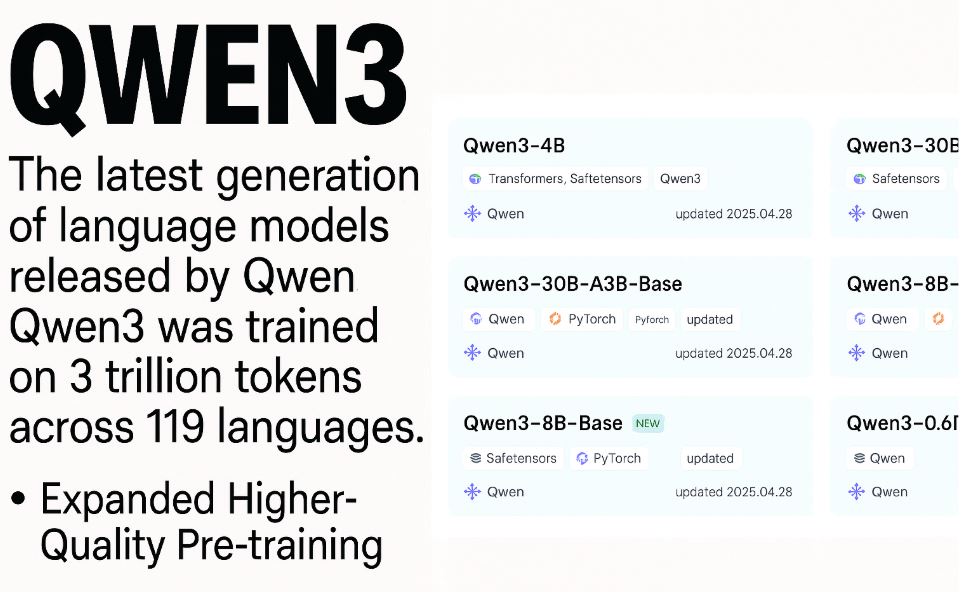

As we covered yesterday, Qwen3’s release was imminent, and Alibaba finally dropped it that same day. The flagship Qwen3-235B-A22B is competitive with DeepSeek R1, o1, Grok 3, and Gemini 2.5 Pro in benchmark evaluations. The Qwen3-30B-A3B is a smaller MoE model that outperforms QwQ-32B, which has roughly 10 times as many activated parameters. You can run these models locally with Ollama, LM Studio, MLX, or llama.cpp.

Introducing Qwen3!

We release and open-weight Qwen3, our latest large language models, including 2 MoE models and 6 dense models, ranging from 0.6B to 235B. Our flagship model, Qwen3-235B-A22B, achieves competitive results in benchmark evaluations of coding, math, general… pic.twitter.com/JWZkJeHWhC

— Qwen (@Alibaba_Qwen) April 28, 2025

Qwen3 supports 119 languages and was pretrained on 36 trillion tokens. Its thinking mode lets it tackle complex problems that require deeper thought, while the non-thinking mode gives you near-instant responses. Qwen3 also has improved support for MCP (Model Context Protocol). You can already try the model through the chat interface.
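
If you run Qwen3 locally through Ollama, switching between the two modes from a script could look roughly like the sketch below. It is an illustration under several assumptions, not an official recipe: it assumes an Ollama server on its default local port, a pulled model tagged `qwen3:30b-a3b` (check `ollama list` for the tag you actually have), and that the `/think` and `/no_think` soft switches described in the Qwen3 model card are honored by your runtime's chat template. The helper names `build_chat_payload` and `ask` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; adjust if your server differs.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(prompt, thinking, model="qwen3:30b-a3b"):
    """Build a non-streaming chat request with a Qwen3 soft switch appended."""
    # Assumption: /think and /no_think are picked up by the chat template.
    switch = "/think" if thinking else "/no_think"
    return {
        "model": model,  # assumed tag, not guaranteed to match your install
        "messages": [{"role": "user", "content": f"{prompt} {switch}"}],
        "stream": False,
    }


def ask(prompt, thinking):
    """Send the request to a running Ollama server and return the reply text."""
    data = json.dumps(build_chat_payload(prompt, thinking)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]


if __name__ == "__main__":
    # Needs a local Ollama server with the model already pulled.
    print(ask("How many primes are there below 50?", thinking=True))
    print(ask("Say hello in French.", thinking=False))
```

Keeping the payload builder separate from the network call makes it easy to inspect what is sent before pointing it at a real server. The same request shape should carry over to other local runtimes that expose a chat endpoint, though the URL and response fields will differ.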

[HT]