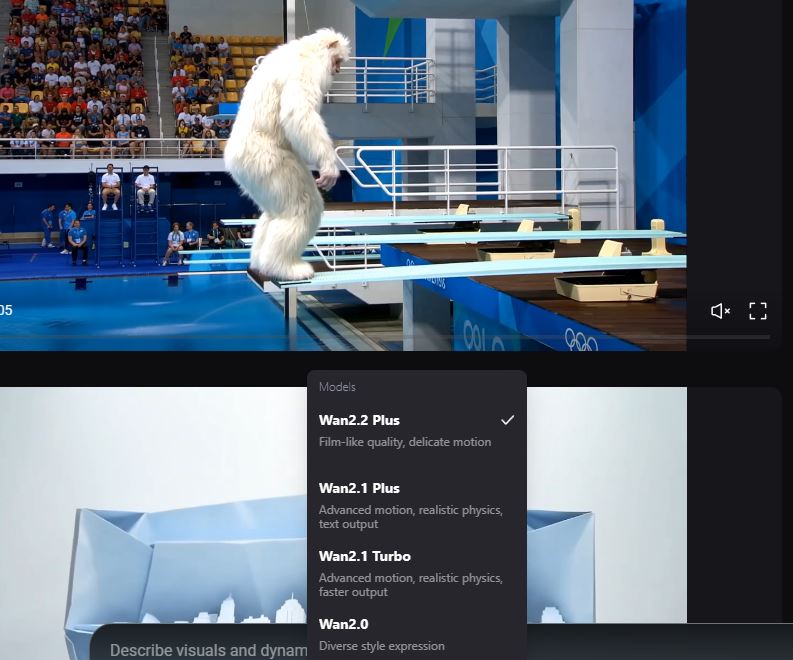

When it comes to video models, open source ones are quickly catching up to private models. Take Wan2.2, for instance: it is a multimodal generative model that supports text-to-video, image-to-video, and text + image-to-video generation. Compared to the previous model, Wan2.2 is trained on significantly more data, with 65.6% more images and 83.2% more videos. You can control lighting, camera angle, shot type, and a lot more.

Its hybrid TI2V model can run on consumer-grade graphics cards like the RTX 4090. This model supports both text-to-video and image-to-video generation at 720p and 24 fps. You can try it here.