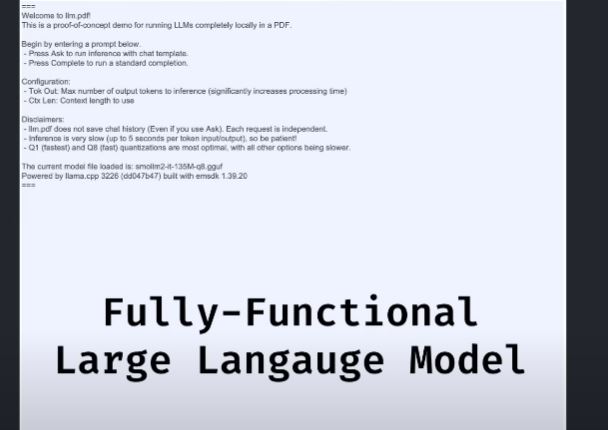

We have all seen LLMs that can be run locally. Did you know that it is possible to run an entire large language model in a PDF file? llm.pdf demonstrates how that can be done. It “uses Emscripten to compile llama.cpp into asm.js, which can then be run in the PDF using an old PDF JS injection.” You can see it in action here:

This PDF runs AI
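The "old PDF JS injection" works because PDF files can carry JavaScript actions. As an illustration only, the Python sketch below builds a minimal one-page PDF whose catalog has an `/OpenAction` of type `/JavaScript`, so a viewer with JavaScript enabled runs the script when the file opens. This is an assumption about the general mechanism, not llm.pdf's actual code. `build_pdf_with_js` and `pdf_literal` are hypothetical helper names, and many viewers restrict or disable PDF JavaScript entirely. A project like llm.pdf would embed a much larger script, such as compiled asm.js, instead of a one-line alert.

```python
def pdf_literal(text: str) -> bytes:
    """Encode text as a PDF literal string, escaping backslashes and parentheses."""
    escaped = text.replace("\\", "\\\\").replace("(", "\\(").replace(")", "\\)")
    return b"(" + escaped.encode("latin-1") + b")"


def build_pdf_with_js(js_code: str) -> bytes:
    """Build a minimal PDF whose /OpenAction runs js_code (hypothetical helper)."""
    objects = [
        # 1: catalog; /OpenAction points at the JavaScript action (object 4)
        b"<< /Type /Catalog /Pages 2 0 R /OpenAction 4 0 R >>",
        # 2: page tree with a single page
        b"<< /Type /Pages /Kids [3 0 R] /Count 1 >>",
        # 3: an empty US Letter page
        b"<< /Type /Page /Parent 2 0 R /Resources << >> /MediaBox [0 0 612 792] >>",
        # 4: the JavaScript action itself
        b"<< /Type /Action /S /JavaScript /JS " + pdf_literal(js_code) + b" >>",
    ]
    out = bytearray(b"%PDF-1.4\n")
    offsets = []
    for num, body in enumerate(objects, start=1):
        offsets.append(len(out))  # byte offset of "N 0 obj", needed by the xref table
        out += b"%d 0 obj\n" % num + body + b"\nendobj\n"

    xref_pos = len(out)
    out += b"xref\n0 %d\n" % (len(objects) + 1)
    # Each xref entry must be exactly 20 bytes, including the trailing " \n".
    out += b"0000000000 65535 f \n"
    for off in offsets:
        out += b"%010d 00000 n \n" % off
    out += b"trailer\n<< /Size %d /Root 1 0 R >>\nstartxref\n%d\n%%%%EOF\n" % (
        len(objects) + 1,
        xref_pos,
    )
    return bytes(out)
```

Passing a script such as `app.alert("Hello");` and saving the returned bytes to a `.pdf` file should show an alert in viewers that support Acrobat's `app.alert`. Whether anything runs at all depends on the viewer's JavaScript support.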

A simple command lets you load a custom model into your PDF file, although only GGUF models are compatible. As explained on GitHub, a 135M-parameter model takes around 5 seconds per input or output token.
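Because only GGUF models work, it can help to check a file before loading it. GGUF files begin with the four ASCII bytes `GGUF`, followed by a little-endian `uint32` version and, from version 2 onward, `uint64` counts of tensors and metadata key/value pairs. The Python sketch below validates that header. It assumes a little-endian GGUF v2 or later file, and `read_gguf_header` and `GGUFHeader` are hypothetical helpers, not part of llm.pdf.

```python
import struct
from typing import NamedTuple

GGUF_MAGIC = b"GGUF"  # first four bytes of every GGUF file


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(data: bytes) -> GGUFHeader:
    """Parse the 24-byte header of a little-endian GGUF v2+ file (hypothetical helper)."""
    if len(data) < 24:
        raise ValueError("data too short for a GGUF v2+ header")
    if data[:4] != GGUF_MAGIC:
        raise ValueError("not a GGUF file (missing 'GGUF' magic bytes)")
    (version,) = struct.unpack_from("<I", data, 4)
    if version < 2:
        # GGUF v1 used 32-bit counts; this sketch only handles v2 and later.
        raise ValueError(f"unsupported GGUF version {version}")
    tensor_count, kv_count = struct.unpack_from("<QQ", data, 8)
    return GGUFHeader(version, tensor_count, kv_count)


def read_gguf_header_from_file(path: str) -> GGUFHeader:
    """Read only the first 24 bytes of a model file and validate them."""
    with open(path, "rb") as f:
        return read_gguf_header(f.read(24))
```

Reading just the header keeps the check cheap even for multi-gigabyte models, since the tensor data is never touched.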

[HT]